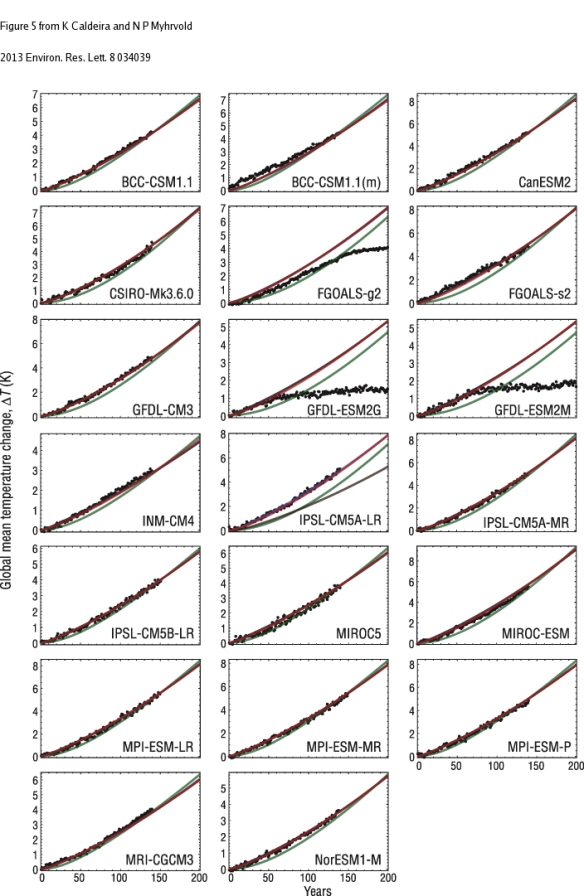

I’m going to give this topic another explanatory shot with some different graphics, because many still don’t grasp the serious problems inherent in trying to separate signal from noise in tree ring size. The most advanced method for attempting this is called Regional Curve Standardization, or RCS, in which ring size is averaged over a set of sampled trees according to the rings’ biological age (i.e. ring number, counting from tree center), and each individual series is then divided by this average. I include five time series graphs, successively containing more information, to try to illustrate the problem. I don’t know that I can make it any clearer than this.

First, shown below are the hypothetical series of 11 trees sampled at a single sampling location.

Each black line shows the annual ring area progression for one of 11 trees having origin dates spaced exactly 10 years apart (the bold line is just the oldest tree of the group). By using ring area as the metric we automatically remove part of the non-climatic trend from each series, namely the purely geometric (inverse quadratic) effect. Any remaining variation is then entirely biological, and it exhibits a very standard tree growth pattern, one in which growth rate increases to a maximum value reached relatively early in life (here, around age 80 or so) and then declines more slowly toward a stable asymptote, which I fix at 1.0. Each tree’s trajectory occurs in a constant climate over the 300-400 year period measured.
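The black curves can be sketched with a simple function. The post does not give the functional form, so the one below is only an assumption chosen to match the description: it peaks at age 80 and settles toward 1.0. The names `growth_curve` and `tree_series`, the peak height of 2.0, and the choice of origin years 0 through 100 are hypothetical as well.

```python
import math

PEAK_AGE = 80      # age of maximum growth ("around age 80 or so" in the text)
PEAK_EXCESS = 1.0  # assumed: ring area at the peak is 1.0 above the asymptote
ASYMPTOTE = 1.0    # stable late-life ring area, fixed at 1.0 in the text

def growth_curve(age):
    """Assumed non-climatic ring area at a given biological age (ring number)."""
    x = age / PEAK_AGE
    # x * exp(1 - x) rises to 1 at x = 1, then decays more slowly toward 0.
    return ASYMPTOTE + PEAK_EXCESS * x * math.exp(1.0 - x)

def tree_series(origin_year, last_year=400):
    """Ring areas of one tree, keyed by calendar year, under a constant climate."""
    return {year: growth_curve(year - origin_year + 1)
            for year in range(origin_year, last_year + 1)}

# 11 trees with origin dates spaced exactly 10 years apart (assumed: years 0..100).
black_series = [tree_series(origin) for origin in range(0, 101, 10)]
```

With these assumed origin dates the oldest tree has 401 rings and the youngest 301, consistent with the 300-400 year period mentioned above.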

The next figure adds two components:

First, the blue line represents a constantly increasing climatic parameter over time, say temperature, expressed as a ratio of its effect on ring size at year 0. Thus, at year 400, the cumulative climatic effect on ring area, regardless of biological age, is exactly 3-fold its year zero value (scale at right). The second addition is the series of red lines, which simply represent those same 11 trees’ growth trajectories under this climate trend. The climatic effect on growth is a super simple linear ramp in all cases–I am not invoking any kind of problematic, complex growth response (e.g. “divergence”), or any other complication. Thus, by definition, if we divide each tree’s red series by its corresponding black series, we get exactly the blue line, in all cases.
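A minimal sketch of that relationship for a single tree, assuming the ramp runs linearly from 1.0 at year 0 to 3.0 at year 400 and acts multiplicatively on ring area. The growth curve is the same hypothetical stand-in as before, and the function names and the origin year of 30 are illustrative only.

```python
import math

def growth_curve(age, peak_age=80):
    """Assumed non-climatic curve: peaks at peak_age, settles toward 1.0."""
    x = age / peak_age
    return 1.0 + x * math.exp(1.0 - x)

def climate_effect(year, last_year=400, final_ratio=3.0):
    """Blue line: linear ramp, 1.0 at year 0 rising to final_ratio at last_year."""
    return 1.0 + (final_ratio - 1.0) * year / last_year

origin = 30                      # hypothetical origin year for one tree
years = list(range(origin, 401))

# Black series: growth in a constant climate (biological age = year - origin + 1).
black = [growth_curve(y - origin + 1) for y in years]
# Red series: the same tree growing under the climate ramp.
red = [b * climate_effect(y) for b, y in zip(black, years)]

# Dividing red by black recovers the blue line in every year.
recovered = [r / b for r, b in zip(red, black)]
```

The division only works here because the true black curve is known, which is never the case with real samples.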

In the third figure:

I add a green line–this is the estimated RCS curve, computed the standard way (by aligning each tree according to its biological age and then averaging the ring sizes over all trees). This RCS curve is thus the estimated non-climatic ring size variation, which we accordingly remove from each tree by dividing the red growth series by it. Finally, we average the resulting 11 index series, over each of the 400 years, giving the stated goal: the estimated climatic time series.

It is at first glance entirely clear that the green RCS curve does not even come close to matching any of the black curves representing the true non-climatic variation…which it must. According to standard dendroclimatological practice we would now divide the 11 red curves by this green RCS curve–which is thereby guaranteed not to return the true climatic signal. So what will it return?

It returns the orange line shown above. No, that’s not a mistake: it will return an estimated climatic trend of zero.
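The three RCS steps described above (average by biological age, divide, average by calendar year) can be sketched as follows. This is not the R code behind the figures, just a small Python illustration under stated assumptions: noise-free trees, a hypothetical growth curve peaking at age 80, origin years 0 through 100, and a ramp from 1.0 to 3.0 over years 0 to 400.

```python
import math
from collections import defaultdict

LAST_YEAR = 400
ORIGINS = range(0, 101, 10)  # 11 trees, origin dates 10 years apart (assumed years)

def growth_curve(age):
    """Assumed non-climatic curve: peaks at age 80, settles toward 1.0."""
    x = age / 80
    return 1.0 + x * math.exp(1.0 - x)

def climate_effect(year):
    """Linear ramp from 1.0 at year 0 to 3.0 at year 400."""
    return 1.0 + 2.0 * year / LAST_YEAR

# Red series as (origin, year, ring_area) records; age = year - origin + 1.
rings = [(o, y, growth_curve(y - o + 1) * climate_effect(y))
         for o in ORIGINS for y in range(o, LAST_YEAR + 1)]

# Step 1 (green line): average ring area over all trees at each biological age.
by_age = defaultdict(list)
for o, y, area in rings:
    by_age[y - o + 1].append(area)
rcs_curve = {age: sum(v) / len(v) for age, v in by_age.items()}

# Step 2: divide every ring by the RCS value for its age to get an index value.
indices = [(y, area / rcs_curve[y - o + 1]) for o, y, area in rings]

# Step 3 (orange line): average the index values within each calendar year.
by_year = defaultdict(list)
for y, idx in indices:
    by_year[y].append(idx)
chronology = {y: sum(v) / len(v) for y, v in sorted(by_year.items())}

# Change between year 100 (first year with all 11 trees present) and year 400.
true_change = climate_effect(400) / climate_effect(100)
estimated_change = chronology[400] / chronology[100]
print(f"true: {true_change:.2f}  estimated: {estimated_change:.2f}")
```

Under these assumptions the chronology rises by only about 3% between years 100 and 400, while the true climatic effect doubles. Because tree age and calendar year are so tightly linked in this sample, the age-aligned average absorbs most of the ramp.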

And this is the entire point–the supposedly most advanced tree ring detrending method is fully incapable of returning the real climatic trend when one exists. Note that I’m keeping everything very simple here–this result does not depend on: (1) either the direction or magnitude of the true trend, or (2) the magnitude, or shape, of the non-climatic trend in the sampled trees (including no such trend whatsoever). That is, this type or magnitude of result is not specific to the situation I set up. The problem can be reduced, but never eliminated, by increasing the variance in tree ages in the sample. But since standard field sampling practice is to sample the oldest possible trees at a site, high age variance within a sample is very rare, a fact which the data of the International Tree-Ring Data Bank (ITRDB) show clearly–which is ironic given that Keith Briffa and Ed Cook mentioned the importance of exactly this issue in a white paper available at the ITRDB site.
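Point (2) can be checked with a small sketch. In a noise-free setup where ring area is the product of an age effect and a climate effect, the age effect cancels when each ring is divided by the age-aligned average, so the chronology is the same whatever shape the non-climatic curve takes. The function `rcs_chronology` and the two growth shapes below are hypothetical illustrations, not the code behind the figures.

```python
import math
from collections import defaultdict

def rcs_chronology(growth, climate, origins, last_year):
    """RCS chronology for noise-free trees with ring area = growth(age) * climate(year)."""
    rings = [(o, y, growth(y - o + 1) * climate(y))
             for o in origins for y in range(o, last_year + 1)]
    # Regional curve: mean ring area at each biological age.
    by_age = defaultdict(list)
    for o, y, area in rings:
        by_age[y - o + 1].append(area)
    rcs = {age: sum(v) / len(v) for age, v in by_age.items()}
    # Index each ring by its age-matched RCS value, then average by calendar year.
    by_year = defaultdict(list)
    for o, y, area in rings:
        by_year[y].append(area / rcs[y - o + 1])
    return {y: sum(v) / len(v) for y, v in sorted(by_year.items())}

def ramp(year):
    """Assumed climate effect: 1.0 at year 0, 3.0 at year 400."""
    return 1.0 + 2.0 * year / 400

def humped(age):
    """Assumed growth curve peaking at age 80 and settling toward 1.0."""
    x = age / 80
    return 1.0 + x * math.exp(1.0 - x)

def flat(age):
    """No non-climatic trend at all."""
    return 1.0

origins = range(0, 101, 10)
with_hump = rcs_chronology(humped, ramp, origins, 400)
without_hump = rcs_chronology(flat, ramp, origins, 400)

# Largest difference between the two chronologies over all 401 years.
max_gap = max(abs(with_hump[y] - without_hump[y]) for y in with_hump)
```

Here `max_gap` is zero up to floating-point rounding: the lost trend comes from the overlap between tree age and calendar year in the sample, not from the shape of the growth curve.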

Lastly, suppose now that the last usable year for all ring series occurred a few decades ago. This can happen, for example, because many ITRDB field samples were collected decades ago, or because of perceived problems in the climate-to-ring response calibration function, which must be stable and dependable (notably, the “divergence” effect, in which linear relationships between climate and ring size break down, badly). What will be the result of eliminating, say, the last five decades of data and replacing them with instrumental data? Well, you will then get exactly this:

Look familiar? Does that look like anything remotely approaching success to you? Again, I have not even broached other possibly confounding problems, such as co-varying growth determinants (e.g. increasing CO2- or N-fertilization, changing soil moistures, or inter-tree competition), nor non-linear responses in the calibration function, nor any of the thorny issues in large-scale sampling strategies, reconstructions and their corresponding data analysis methods. Those things would all exacerbate the problem, not improve it. It’s a total analytical mess–beginning and end of story.

I can’t make it any clearer than this. And yes, I have the R code that generated these data if you want to see it.